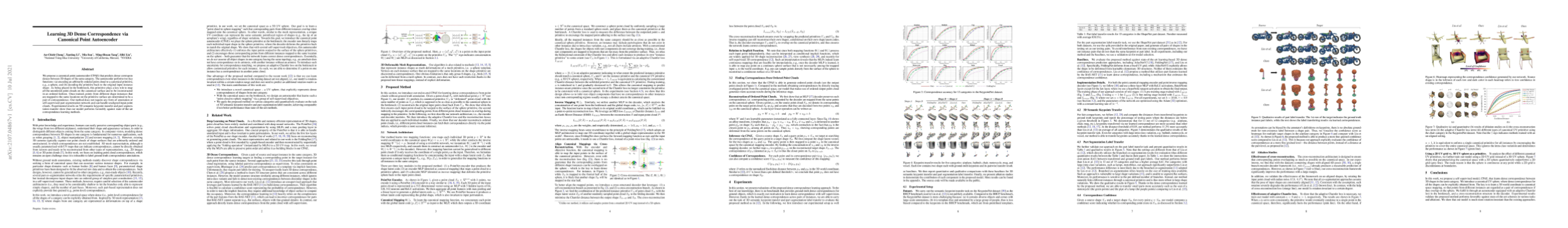

We propose a canonical point autoencoder (CPAE) that predicts dense correspondences between 3D shapes of the same category. The autoencoder performs two key functions: (a) encoding an arbitrarily ordered point cloud to a canonical primitive, e.g., a sphere, and (b) decoding the primitive back to the original input instance shape. Placed at the bottleneck, this primitive maps every unordered point cloud onto a shared canonical surface, from which each shape is reconstructed in an ordered fashion. Once trained, points from different shape instances that are mapped to the same location on the primitive surface are taken to be a corresponding pair. Our method requires neither annotations nor a self-supervised part segmentation network, and it can handle unaligned input point clouds. Experimental results on 3D semantic keypoint transfer and part segmentation transfer show that our model performs favorably against state-of-the-art correspondence learning methods.
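As a minimal sketch of how correspondences could be read off the canonical primitive, the snippet below pairs each source point with the target point whose canonical coordinate is closest. It assumes the encoder outputs are already available as arrays of sphere coordinates; the function name `match_by_canonical_coords`, the toy coordinates, and the use of nearest-neighbor search (rather than exact coincidence of locations) are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def match_by_canonical_coords(canon_src, canon_tgt):
    """For each source point, return the index of the target point whose
    canonical coordinate is nearest (hypothetical helper)."""
    # Pairwise squared distances, shape (N_src, N_tgt).
    diff = canon_src[:, None, :] - canon_tgt[None, :, :]
    d2 = (diff ** 2).sum(axis=-1)
    return d2.argmin(axis=1)

# Toy stand-in for encoder outputs: six points on the unit sphere.
canon_tgt = np.array([
    [1.0, 0.0, 0.0], [-1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0], [0.0, -1.0, 0.0],
    [0.0, 0.0, 1.0], [0.0, 0.0, -1.0],
])

# The source shape lists the same canonical locations in a different order,
# with a small offset to mimic imperfect encoding.
perm = np.array([2, 0, 5, 1, 4, 3])
canon_src = canon_tgt[perm] + 0.01

matches = match_by_canonical_coords(canon_src, canon_tgt)
print(matches.tolist())
```

In this toy case the recovered indices equal `perm`, since each perturbed source coordinate stays far closer to its own canonical location than to any other. The dense pairwise distance matrix is only practical for small point clouds; a spatial index would be the usual substitute for larger ones.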

Overview

Keypoint Transfer Results

Here we show our keypoint transfer results. The leftmost shape is the source; the remaining shapes are the targets.

Source

Targets

Part Label Transfer Results

Here we show our part label transfer results. The leftmost shape is the source; the remaining shapes are the targets.

Source

Targets
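The part label transfer shown above can be sketched as copying, for every target point, the label of the source point that lies closest to it on the canonical primitive. This is a hedged illustration only: the function `transfer_labels`, the toy coordinates, and the integer part ids are hypothetical, and the canonical coordinates are assumed to come from an already trained encoder.

```python
import numpy as np

def transfer_labels(canon_src, labels_src, canon_tgt):
    """Give each target point the part label of its nearest source point
    in canonical space (hypothetical helper)."""
    # Pairwise squared distances, shape (N_tgt, N_src).
    diff = canon_tgt[:, None, :] - canon_src[None, :, :]
    d2 = (diff ** 2).sum(axis=-1)
    nearest_src = d2.argmin(axis=1)
    return labels_src[nearest_src]

# Toy canonical coordinates for three labeled source points.
canon_src = np.array([
    [0.0, 0.0, 1.0],
    [0.0, 0.0, -1.0],
    [1.0, 0.0, 0.0],
])
labels_src = np.array([0, 1, 2])  # made-up part ids

# Toy canonical coordinates for four unlabeled target points.
canon_tgt = np.array([
    [0.10, 0.0, 0.99],
    [0.90, 0.1, 0.00],
    [0.00, 0.1, -0.90],
    [0.05, 0.0, -1.00],
])

labels_tgt = transfer_labels(canon_src, labels_src, canon_tgt)
print(labels_tgt.tolist())
```

The same lookup would carry any other per-point attribute, such as a color, from source to target.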

Correspondence Confidence Results

Given a source shape and a target shape, our model computes, for every point in the target shape, a confidence score indicating whether a corresponding point exists in the source shape. We visualize the correspondence confidence results for the Motorcycle category as heatmaps, where darker colors indicate lower confidence that a correspondence exists.

Source

Targets

Texture Transfer Results

Thanks to the learned dense and accurate correspondences, we can transfer the texture from a source shape to an arbitrary target shape without any form of annotation.